Designing Learning Methods for Health that are Robust, Private, and Fair

We build robust machine learning models that can efficiently and accurately model events from healthcare data, and we investigate best practices for integrating multiple data sources and for learning domain-appropriate representations.

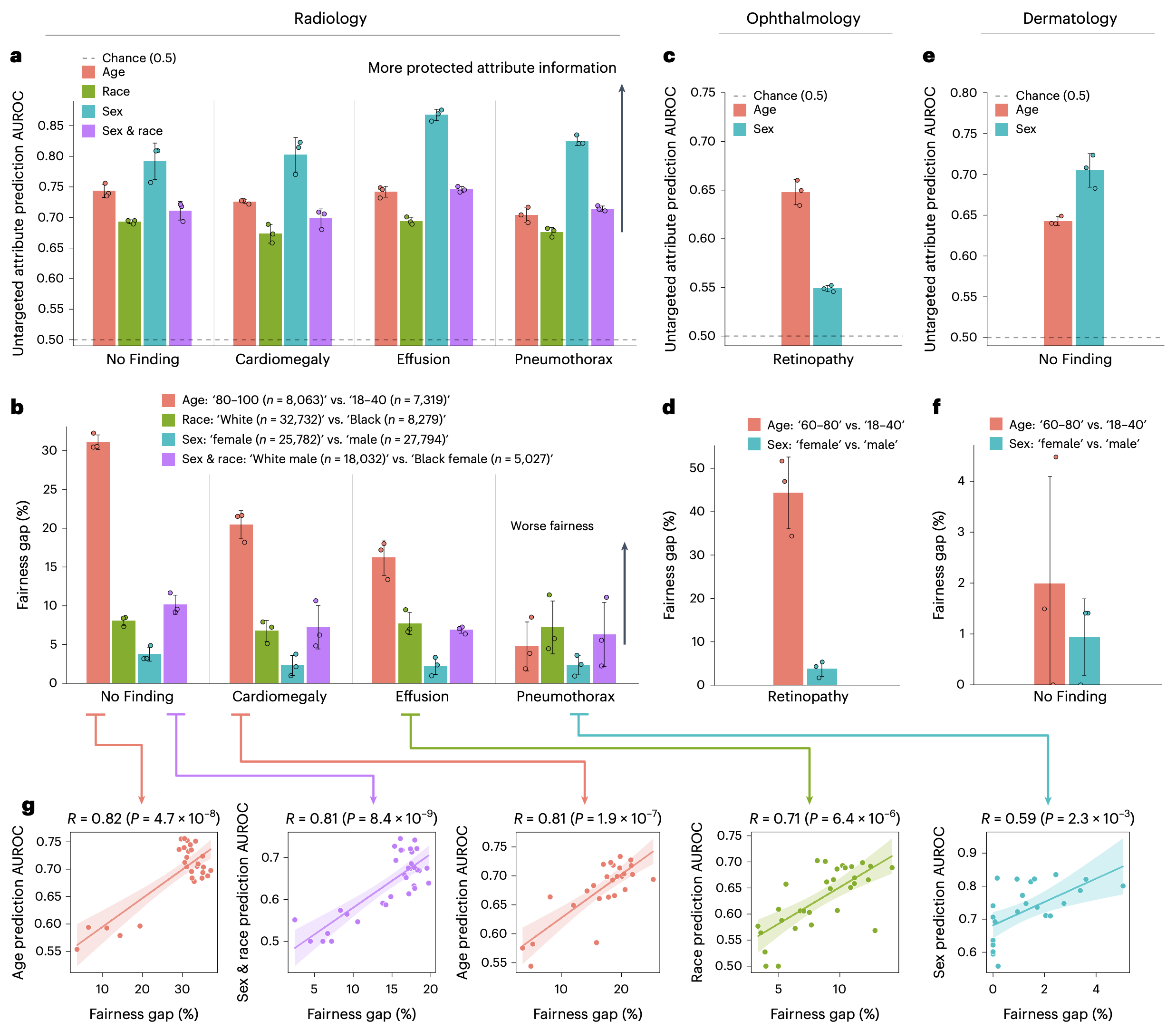

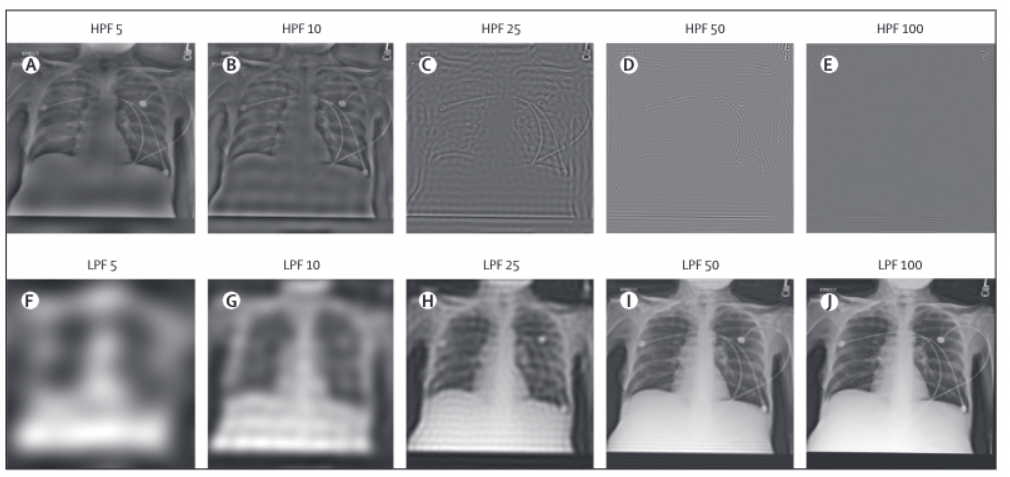

The Limits of Fair Medical Imaging AI in Real-World Generalization

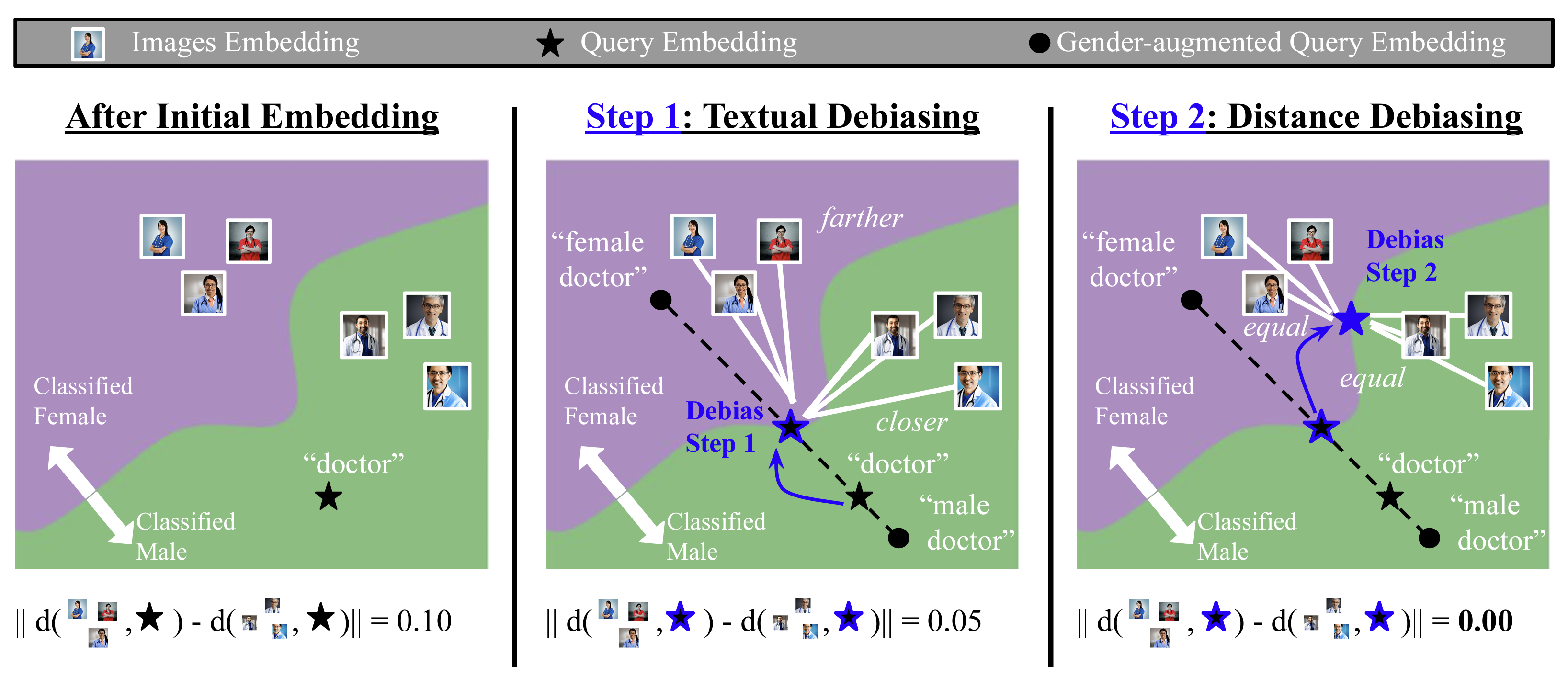

BendVLM: Test-Time Debiasing of Vision-Language Embeddings

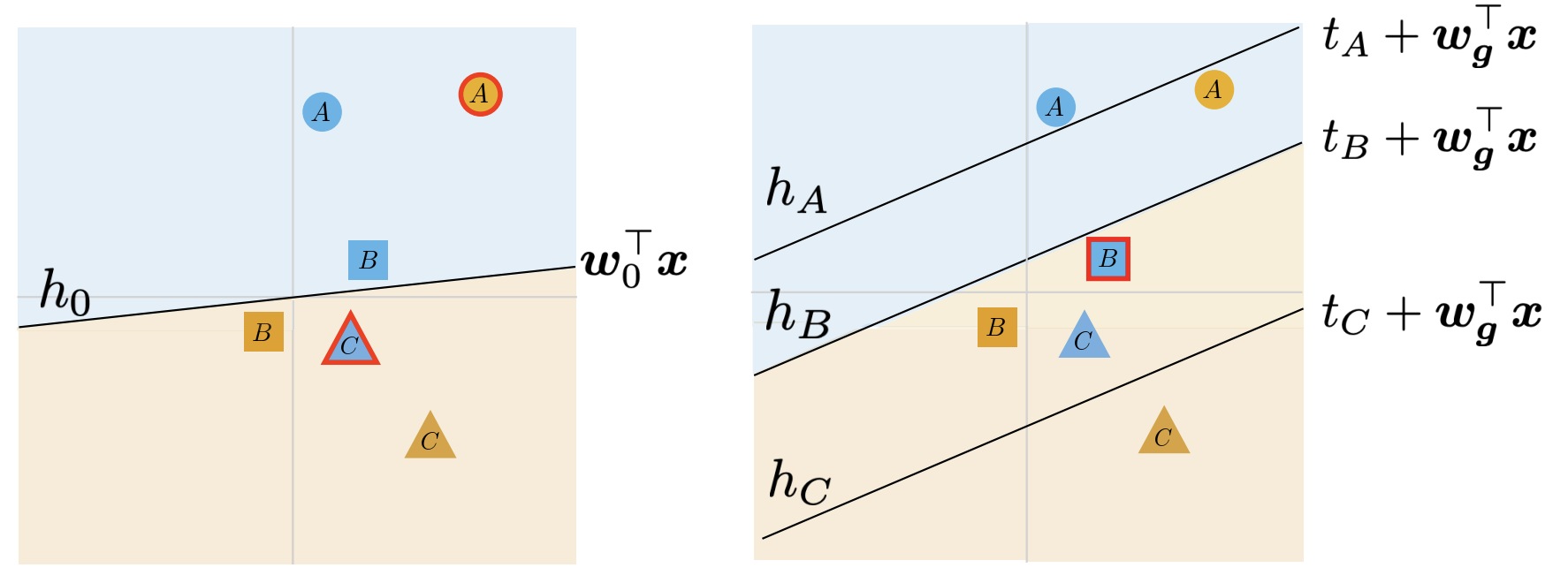

When Personalization Harms: Reconsidering the Use of Group Attributes in Prediction

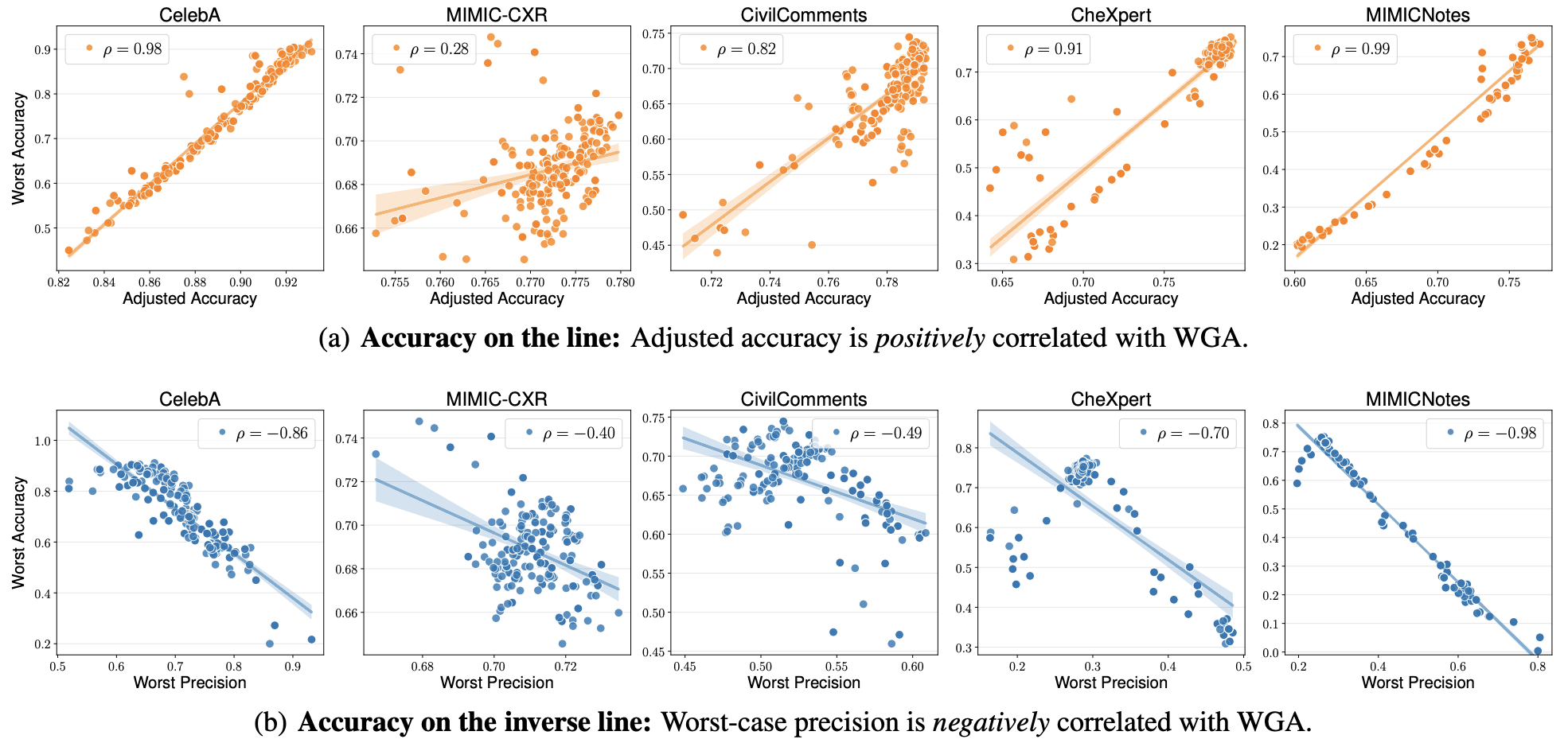

Change is Hard: A Closer Look at Subpopulation Shift

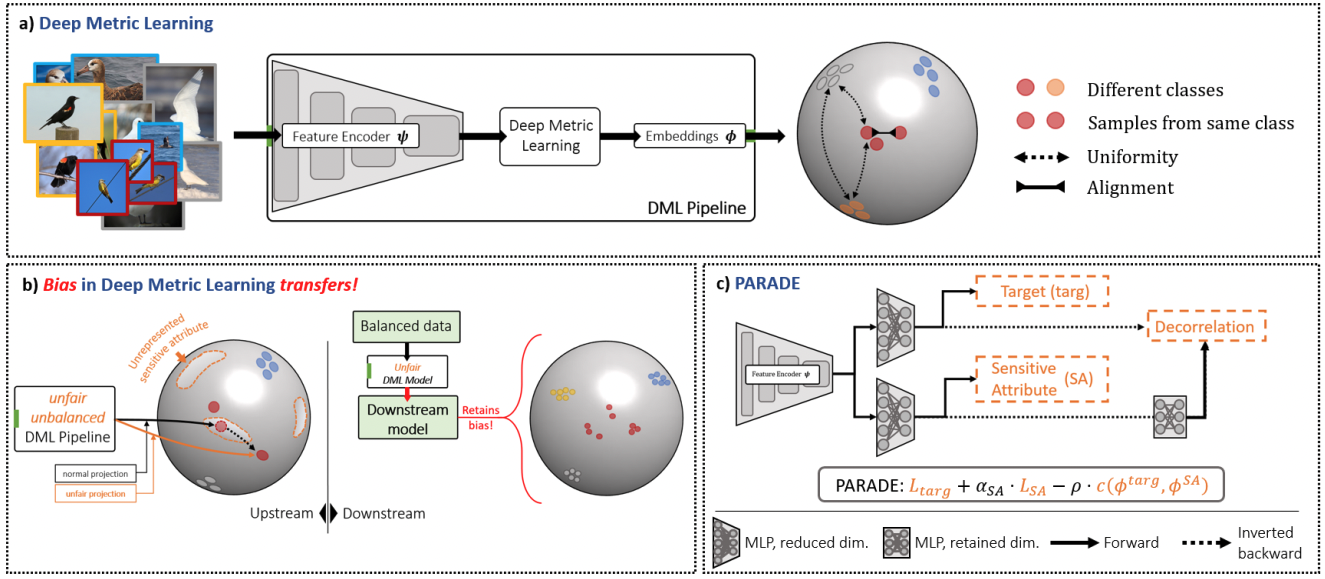

Is Fairness Only Metric Deep? Evaluating and Addressing Subgroup Gaps in Deep Metric Learning

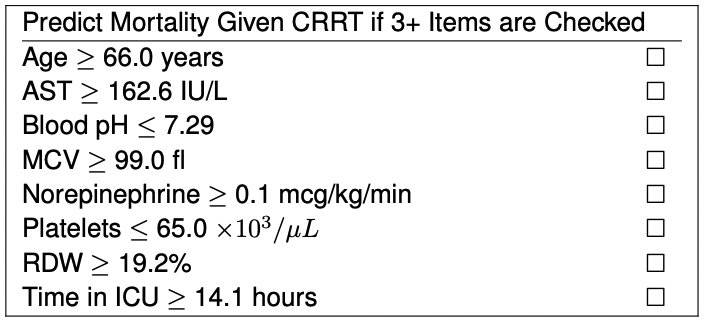

Learning Optimal Predictive Checklists

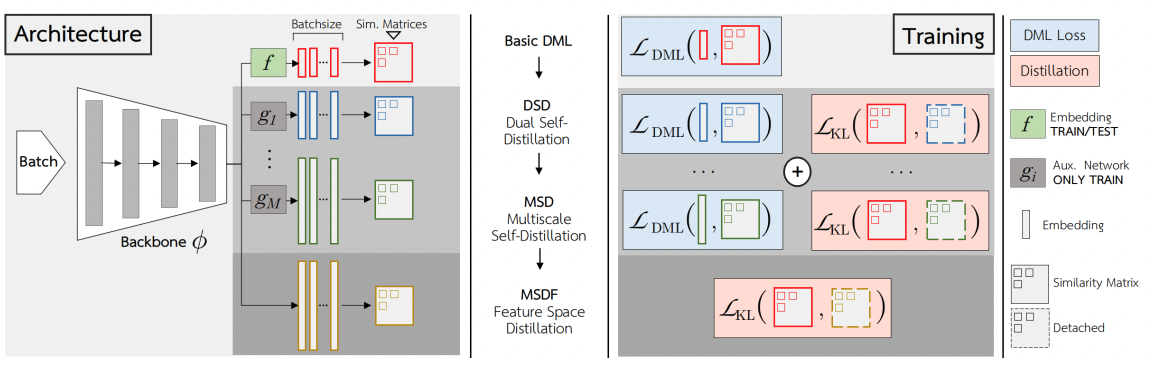

Simultaneous Similarity-based Self-Distillation for Deep Metric Learning

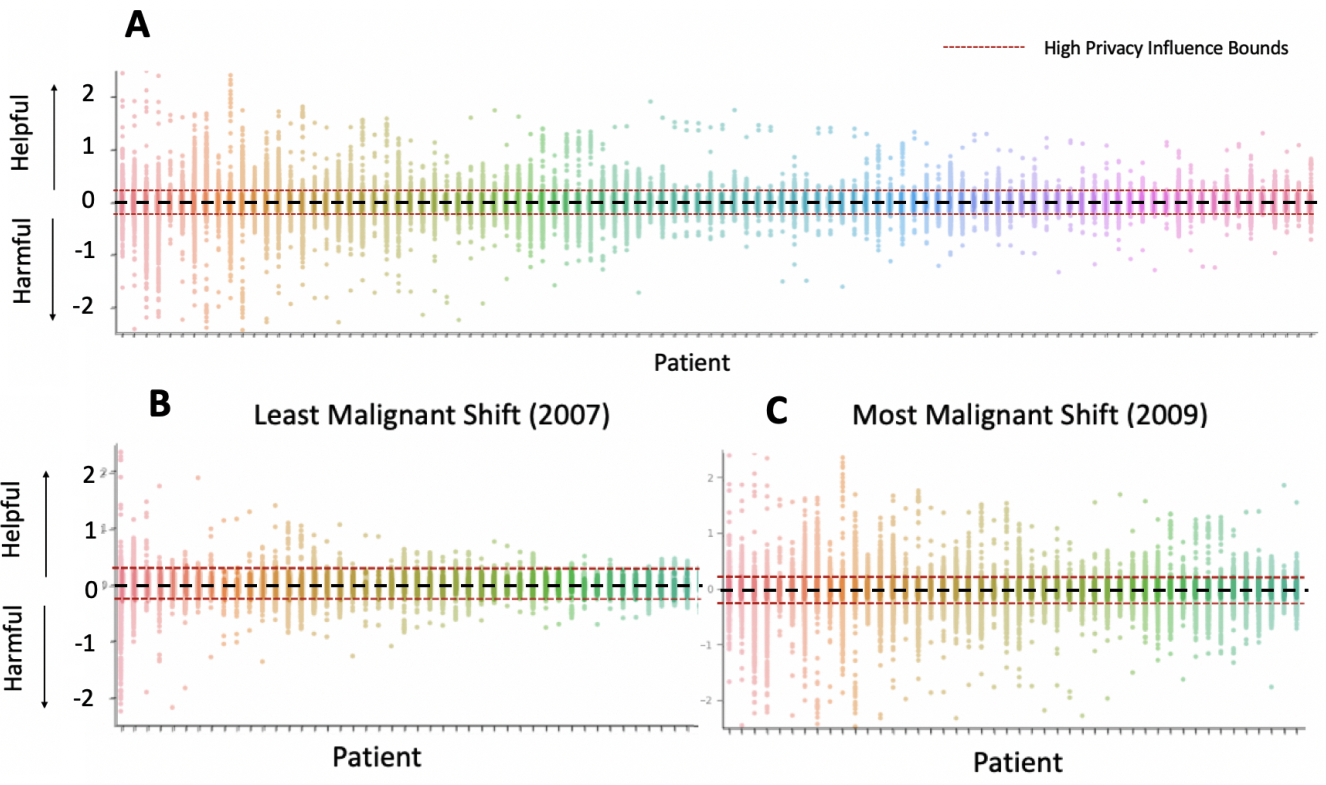

Chasing Your Long Tails: Differentially Private Prediction in Health Care Settings

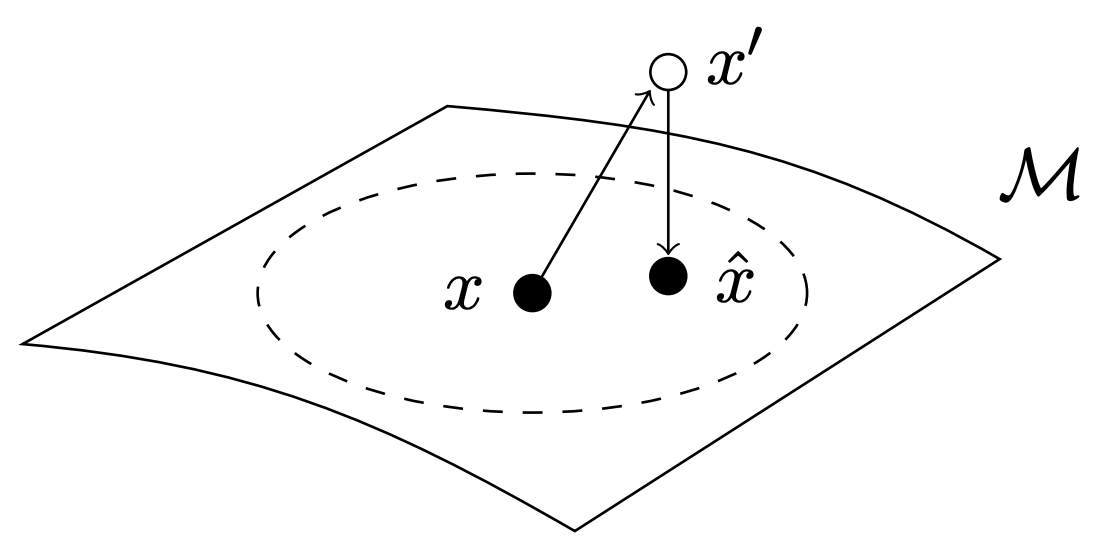

SSMBA: Self-Supervised Manifold Based Data Augmentation for Improving Out-of-Domain Robustness

Auditing Bias and Improving Ethics in Health with ML

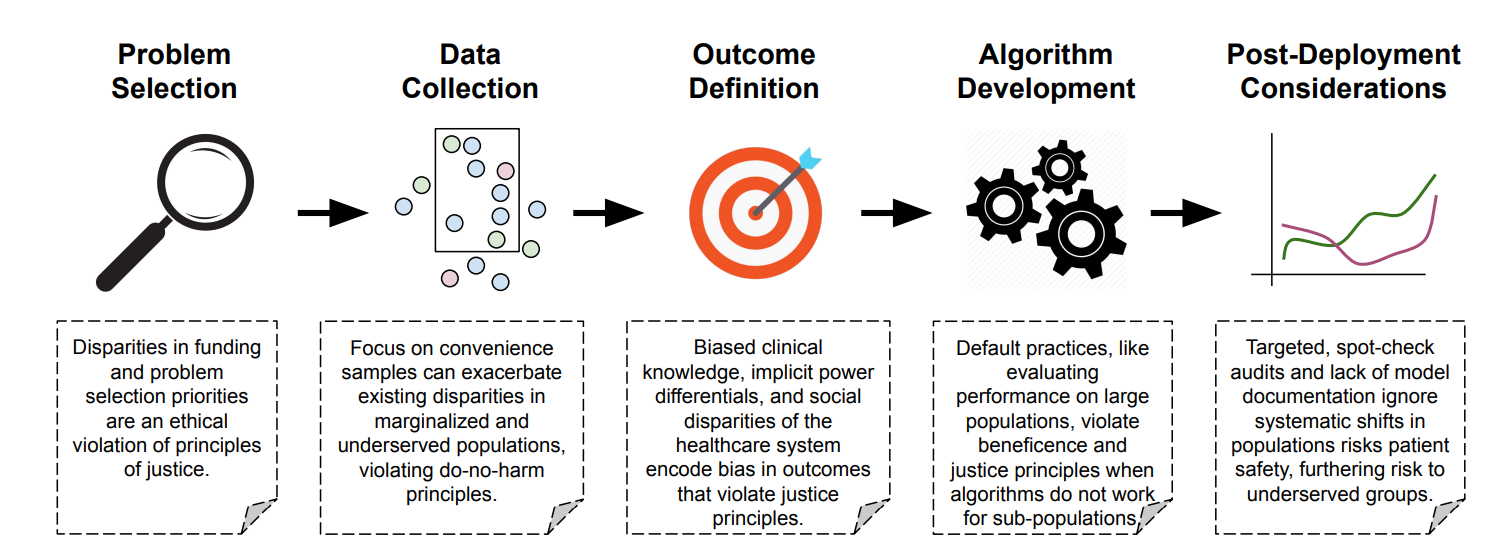

The labels we obtain from health research and health practice are all based on decisions made by humans operating within a larger system. Auditing and improving model fairness, and understanding the trade-offs that other constructs such as privacy may impose, are important parts of responsible machine learning in health, and we work on both.

Settling the Score on Algorithmic Discrimination in Health Care

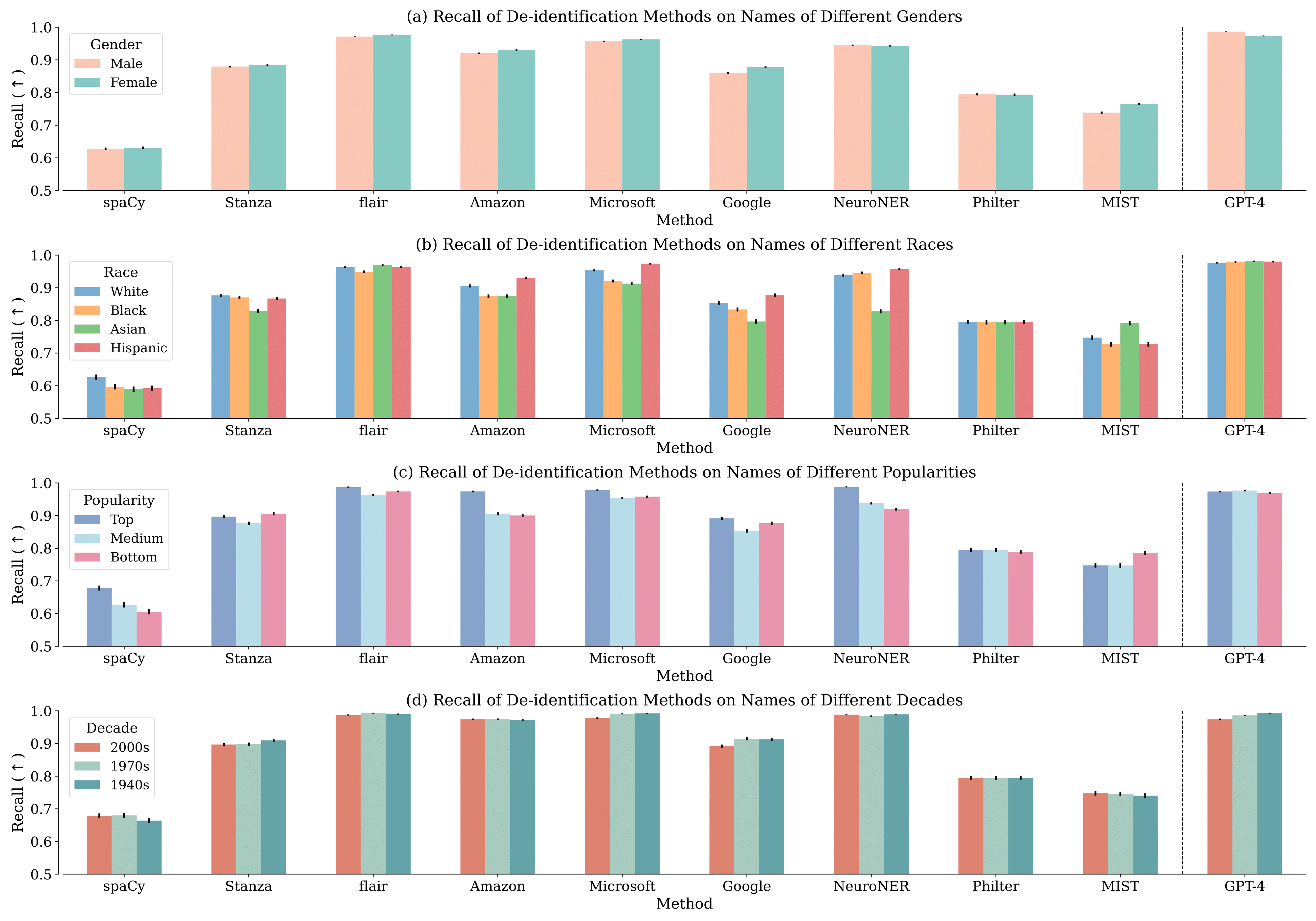

In the Name of Fairness: Assessing the Bias in Clinical Record De-identification

In medicine, how do we machine learn anything real?

AI recognition of patient race in medical imaging: a modelling study

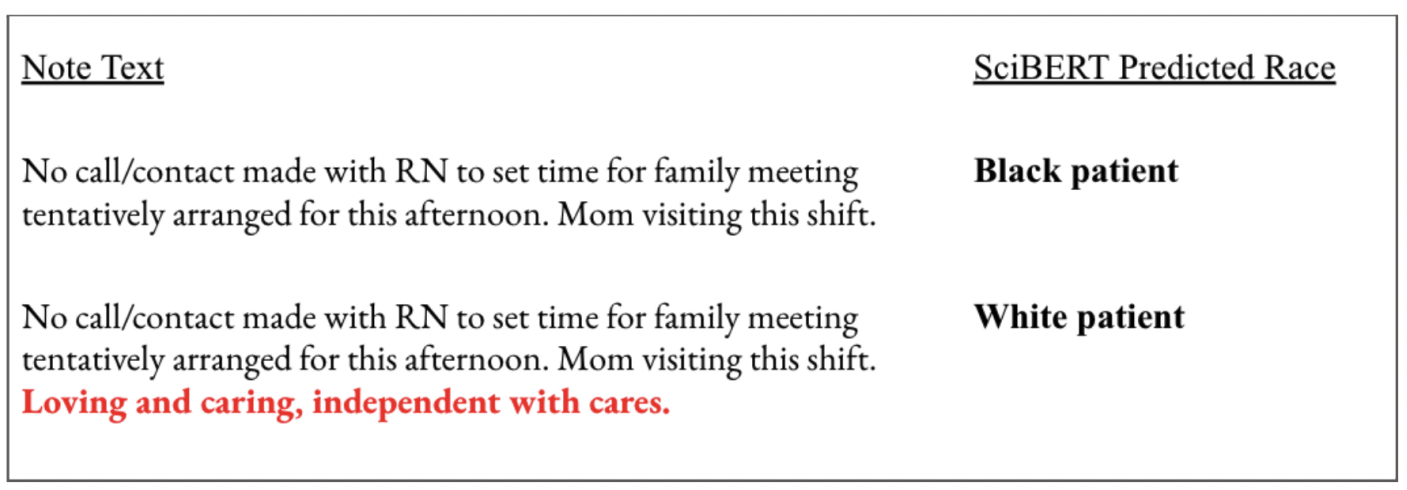

Write It Like You See It: Detectable Differences in Clinical Notes By Race Lead To Differential Model Recommendations

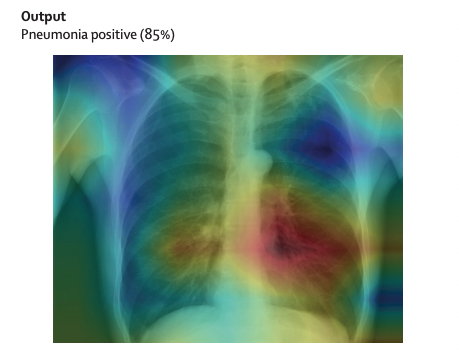

The false hope of current approaches to explainable artificial intelligence in health care

Ethical machine learning in healthcare

Challenges to the reproducibility of machine learning models in health care

Addressing Challenges of Designing and Evaluating Systems

Even a perfect model will fail if it is not used appropriately or does not fit the environment in which it operates. We work to define how models should interact with expert and non-expert users so that overall health practice and knowledge actually improve.

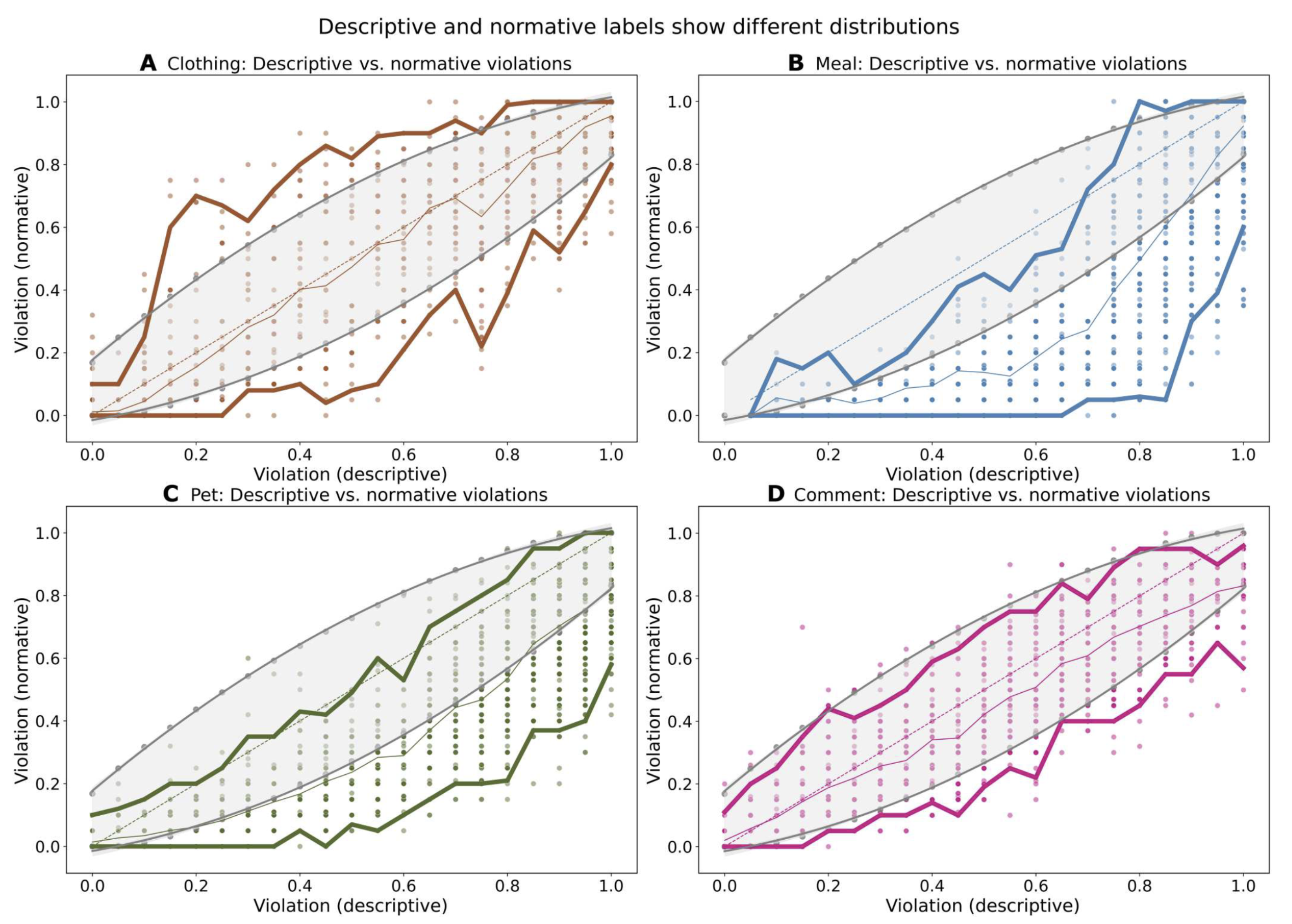

Judging Facts, Judging Norms: Training Machine Learning Models to Judge Humans Requires a Modified Approach to Labeling Data

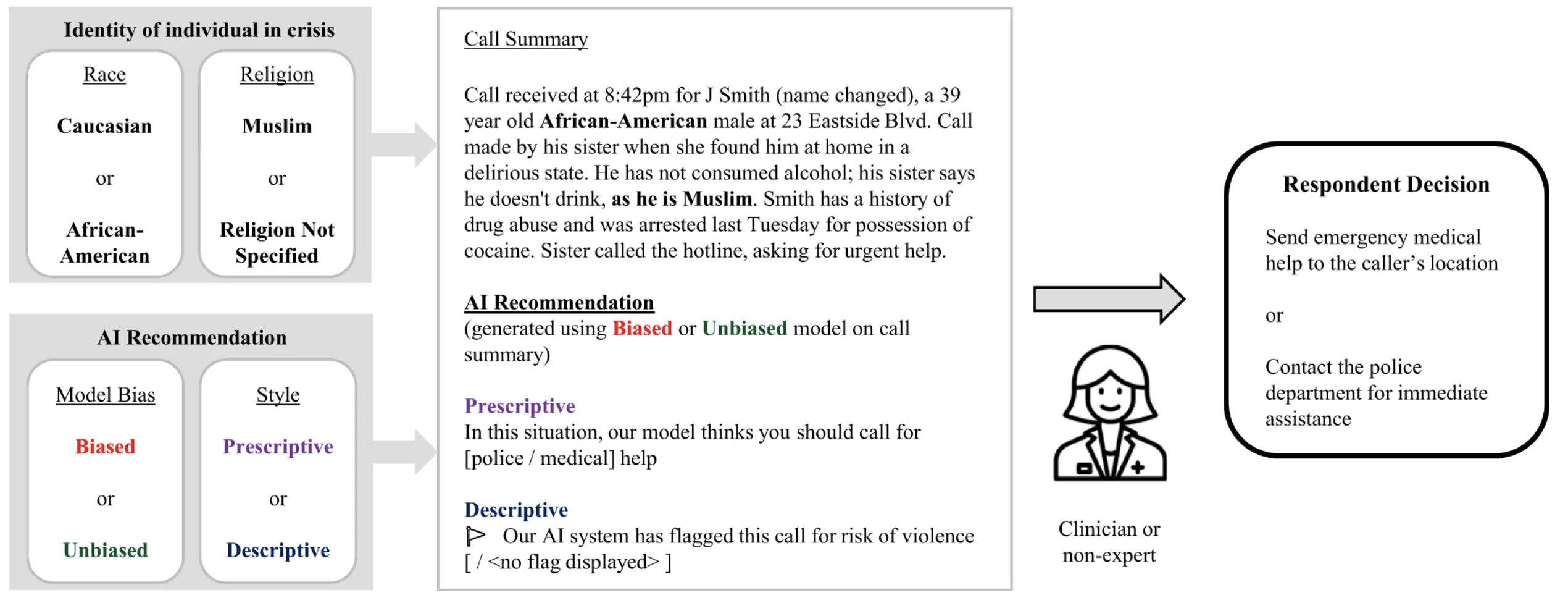

Mitigating the impact of biased artificial intelligence in emergency decision-making

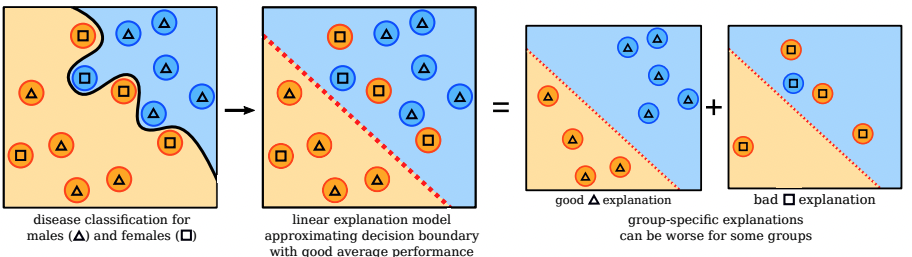

The Road to Explainability is Paved with Bias: Measuring the Fairness of Explanations

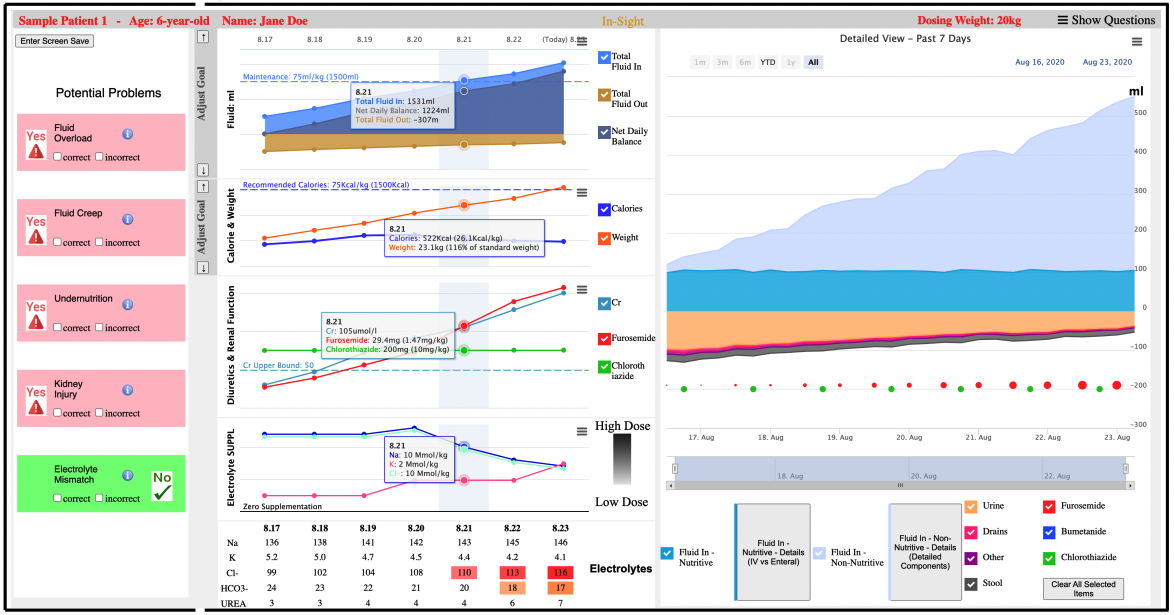

Get To The Point! Problem-Based Curated Data Views To Augment Care For Critically Ill Patients

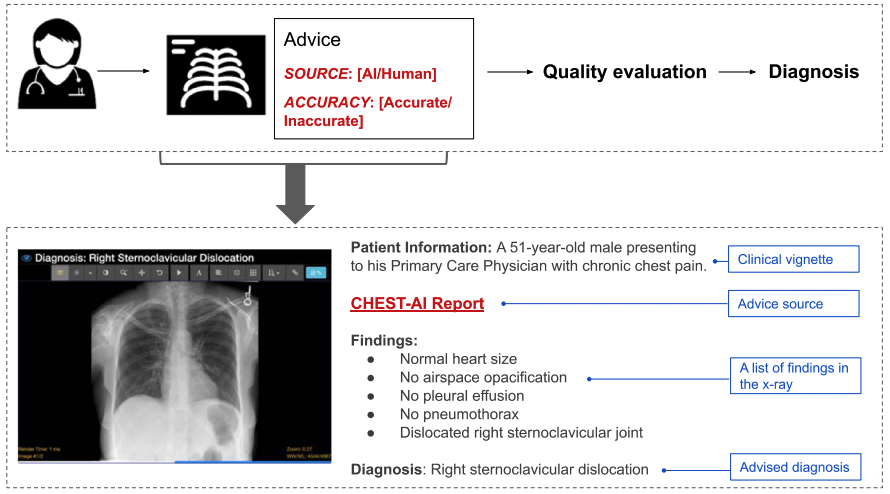

Do as AI say: susceptibility in deployment of clinical decision-aids